Building an Agentic Coder from Scratch

Ben Houston • 6 Minutes Read • March 20, 2025

Tags: mycoder, agents, software-development, forward-js, talks

This is a write-up of my March 20, 2025 talk at the Ottawa Forward JS Meetup.

In recent years, Large Language Models (LLMs) have transformed how we interact with software, fundamentally reshaping coding practices. I've recently explored these frontiers by creating MyCoder.ai, an open-source agentic coding assistant that leverages the latest advancements in AI to automate coding tasks, debug its own work, and significantly enhance developer productivity.

LLM Development: From Chat Completions to Agentic Workflows

In this section, we'll quickly cover the evolution of LLM APIs and capabilities that enabled agentic workflows.

Chat Completions (2022)

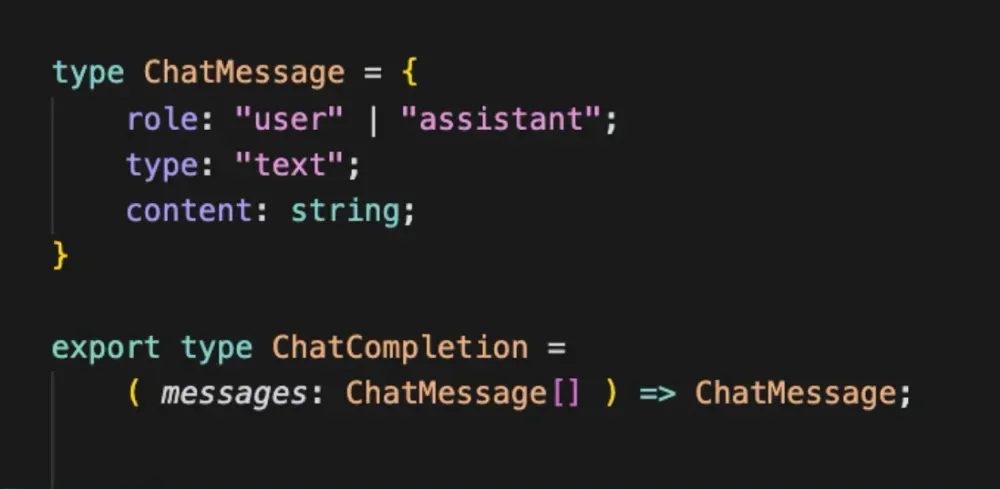

The original interface of ChatGPT introduced a simple but powerful paradigm: conversational completion. Users input alternating messages labeled "user" and "assistant," and the model predicts the next assistant message. This straightforward structure became the backbone of interactive AI experiences.
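To make the shape concrete, here is a minimal TypeScript sketch of such a transcript. The `ChatMessage` type and the `isValidTranscript` helper are illustrative names, not any vendor's SDK types. The sketch assumes the strict user/assistant alternation described above, so it leaves out system messages. A valid transcript ends on a user turn, and the model's job is to produce the next assistant turn.

```typescript
// Illustrative types: not taken from any particular LLM SDK.
type Role = "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Checks the strict alternation assumed here: user, assistant, user, ...
// and that the transcript ends on a user turn awaiting the model's reply.
function isValidTranscript(messages: ChatMessage[]): boolean {
  if (messages.length === 0 || messages[messages.length - 1].role !== "user") {
    return false;
  }
  return messages.every((msg, i) => msg.role === (i % 2 === 0 ? "user" : "assistant"));
}

const transcript: ChatMessage[] = [
  { role: "user", content: "What does `git status` do?" },
  { role: "assistant", content: "It shows the state of the working tree." },
  { role: "user", content: "And `git diff`?" },
];

console.log(isValidTranscript(transcript)); // true
```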

Structured Chat Completions

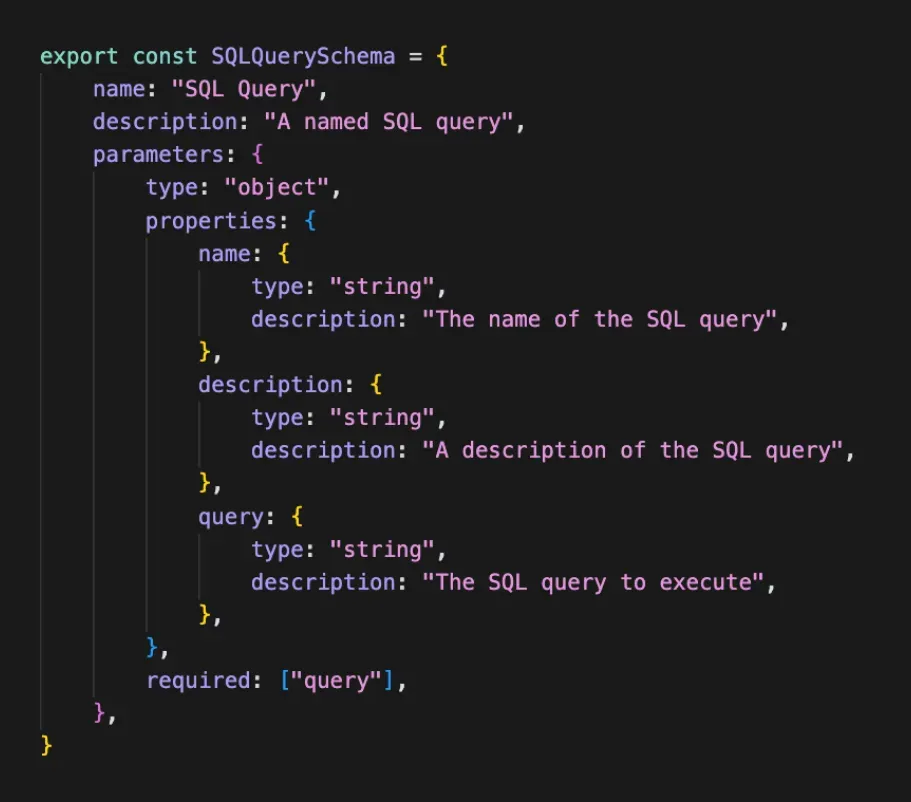

To achieve richer interactions, structured responses emerged. Developers began instructing models to return XML, JSX, and particularly JSON, due to its ease of parsing. LLMs then began to be trained to follow JSON Schemas, which let developers explicitly define the expected shape of a response, enhancing reliability and programmatic integration.
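On the application side, this usually means parsing the model's raw text as JSON and checking it against the schema you asked for before trusting it. The sketch below uses a hypothetical `fileSummarySchema`. It deliberately checks only required fields of type `string` and `integer`, so treat it as a stand-in for a real JSON Schema validator rather than a replacement.

```typescript
// Hypothetical schema we might ask the model to follow (JSON Schema syntax).
const fileSummarySchema = {
  type: "object",
  properties: {
    path: { type: "string" },
    summary: { type: "string" },
    lineCount: { type: "integer" },
  },
  required: ["path", "summary", "lineCount"],
} as const;

interface FileSummary {
  path: string;
  summary: string;
  lineCount: number;
}

// Parse the model's raw output and check required fields against the schema.
// Only "string" and "integer" are handled: this is a sketch, not a full validator.
function parseFileSummary(raw: string): FileSummary {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    throw new Error("expected a JSON object");
  }
  const obj = data as Record<string, unknown>;
  for (const key of fileSummarySchema.required) {
    const expected = fileSummarySchema.properties[key].type;
    const value = obj[key];
    const ok =
      expected === "integer"
        ? typeof value === "number" && Number.isInteger(value)
        : typeof value === expected;
    if (!ok) throw new Error(`field "${key}" should be ${expected}`);
  }
  return obj as unknown as FileSummary;
}
```

A malformed reply then fails loudly at the boundary instead of propagating through the program.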

Tool Use (2023)

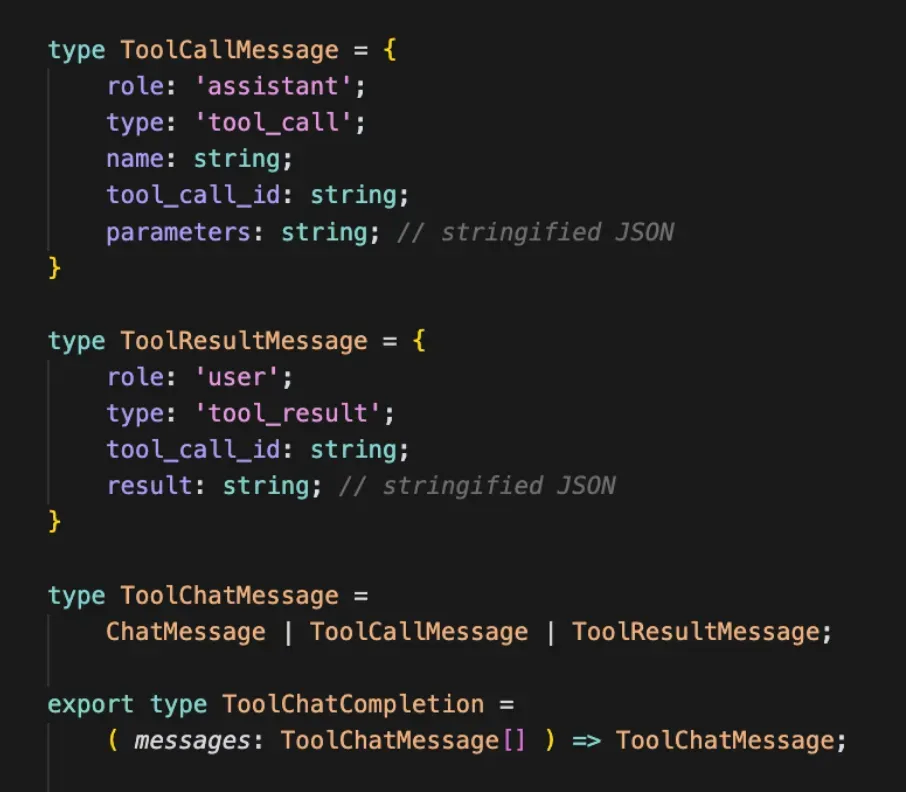

Structured responses naturally evolved into LLM "tool calling." Here, LLMs learned to invoke functions defined by JSON schemas. The application executes each call and feeds the result back to the model, effectively creating a loop of interactions.

Models such as GPT-4 were explicitly trained for this structured interaction, setting the stage for sophisticated workflows.
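The loop itself is small. In this sketch the model is a plain function returning either a tool call or a final answer, and a scripted fake stands in for a real LLM. All names (`runToolLoop`, `ModelReply`, the `add` tool) are hypothetical and only loosely modelled on real tool-calling APIs. The `maxTurns` guard is one simple defence against the endless looping that plagued early agents.

```typescript
// Hypothetical shapes, loosely modelled on tool-calling APIs.
interface ToolCall {
  name: string;
  input: Record<string, unknown>;
}
type ModelReply = { kind: "tool_call"; call: ToolCall } | { kind: "final"; text: string };
type Model = (history: string[]) => ModelReply;
type Tool = (input: Record<string, unknown>) => string;

// Ask the model, run any requested tool, append the result, and repeat
// until the model gives a final answer or the turn limit is reached.
function runToolLoop(model: Model, tools: Record<string, Tool>, task: string, maxTurns = 10): string {
  const history: string[] = [`user: ${task}`];
  for (let turn = 0; turn < maxTurns; turn++) {
    const reply = model(history);
    if (reply.kind === "final") return reply.text;
    const tool = tools[reply.call.name];
    const result = tool ? tool(reply.call.input) : `error: unknown tool ${reply.call.name}`;
    history.push(`tool_call: ${reply.call.name} ${JSON.stringify(reply.call.input)}`);
    history.push(`tool_result: ${result}`);
  }
  throw new Error("turn limit reached");
}

// Scripted stand-in for the LLM: request the "add" tool once, then answer.
const fakeModel: Model = (history) =>
  history.some((h) => h.startsWith("tool_result"))
    ? { kind: "final", text: `Done: ${history[history.length - 1]}` }
    : { kind: "tool_call", call: { name: "add", input: { a: 2, b: 3 } } };

const tools: Record<string, Tool> = {
  add: ({ a, b }) => String(Number(a) + Number(b)),
};

console.log(runToolLoop(fakeModel, tools, "What is 2 + 3?")); // Done: tool_result: 5
```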

Moving Towards Agents (mid-2024)

Early attempts like AutoGPT tried chaining multiple tools to form agents capable of autonomous workflows. However, limitations in LLM capabilities frequently resulted in agents becoming stuck or looping.

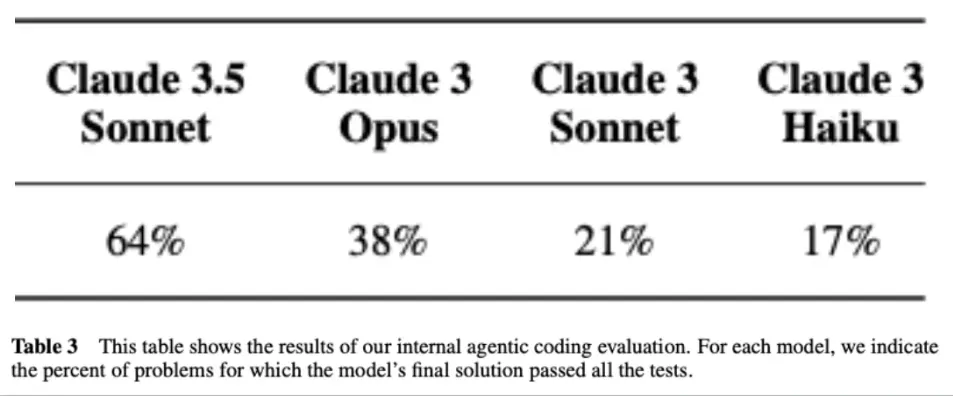

This changed significantly in mid-2024, when Anthropic's Claude 3.5 and OpenAI's o1 models were explicitly trained on agentic workflows, especially coding. This training dramatically improved agents' ability to autonomously execute complex tasks without getting stuck.

Building MyCoder.ai

Seed AI: A Minimal Agentic Approach

I began with a straightforward but ambitious prompt (paraphrased a bit for this blog post):

"You are an agentic coder, you do what you're asked. You figure out context by examining README.md or standard files. Use available tools like git or gh CLI. Follow CONTRIBUTOR.md guidelines and ask for clarifications when needed."

The initial toolset included minimal commands:

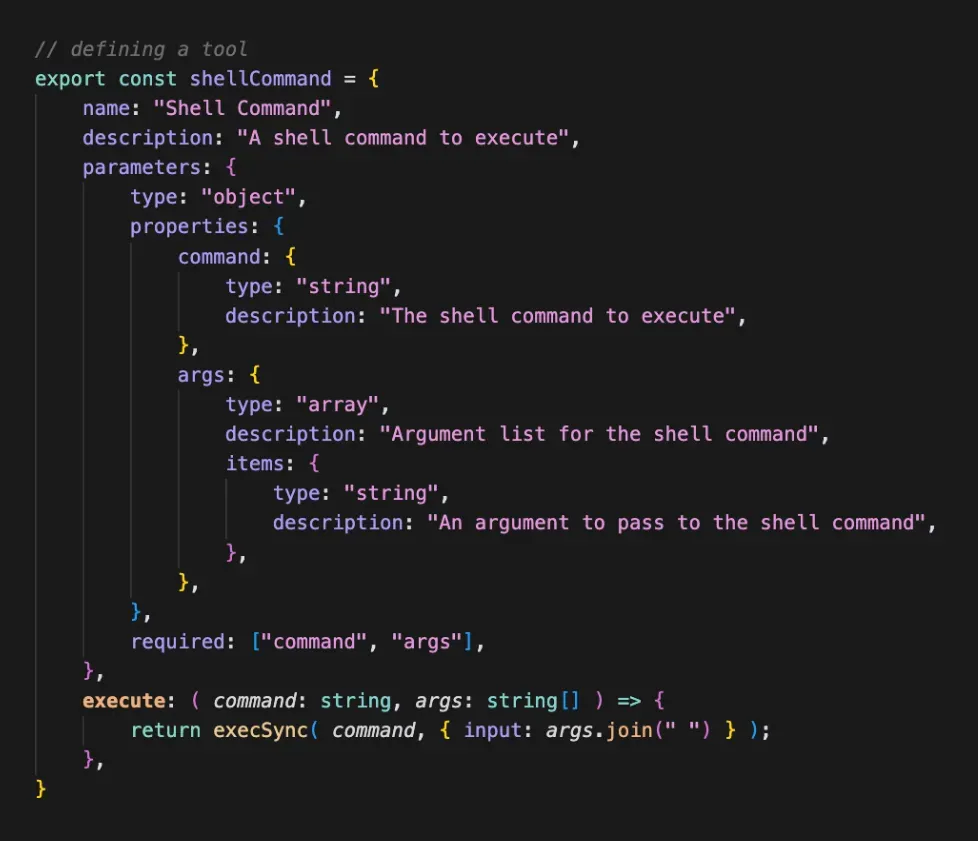

- shellExec for executing shell commands

- Basic file I/O via readFile and writeFile

- userQuery for asking the user questions (needed because control is inverted in agentic workflows: the agent drives, and the user responds)
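A toolset this small fits in a single table. The sketch below pairs each tool's JSON-schema description (what the LLM would see) with a Node.js handler. The `ToolDef` shape and the descriptions are assumptions for illustration, not MyCoder's actual code. Note that `shellExec` is synchronous, so it blocks until the command exits. `readFile` and `writeFile` also work on whole files.

```typescript
import { execSync } from "node:child_process";
import { readFileSync, writeFileSync } from "node:fs";
import { createInterface } from "node:readline/promises";

// Hypothetical tool definition: schema for the LLM plus a handler for the host.
interface ToolDef {
  description: string;
  parameters: object;
  run: (input: any) => Promise<string>;
}

const str = { type: "string" };

const tools: Record<string, ToolDef> = {
  shellExec: {
    description: "Run a shell command and return its stdout.",
    parameters: { type: "object", properties: { command: str }, required: ["command"] },
    // Blocks until the command exits; throws if the exit code is non-zero.
    run: async ({ command }) => execSync(command, { encoding: "utf8" }),
  },
  readFile: {
    description: "Read an entire file as UTF-8 text.",
    parameters: { type: "object", properties: { path: str }, required: ["path"] },
    run: async ({ path }) => readFileSync(path, "utf8"),
  },
  writeFile: {
    description: "Overwrite an entire file with new content.",
    parameters: { type: "object", properties: { path: str, content: str }, required: ["path", "content"] },
    run: async ({ path, content }) => {
      writeFileSync(path, content);
      return `wrote ${path}`;
    },
  },
  userQuery: {
    description: "Ask the human user a question and return their answer.",
    parameters: { type: "object", properties: { question: str }, required: ["question"] },
    run: async ({ question }) => {
      const rl = createInterface({ input: process.stdin, output: process.stdout });
      try {
        return await rl.question(`${question} `);
      } finally {
        rl.close();
      }
    },
  },
};
```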

I purposely didn't implement any safety features, relying instead on the LLM's inherent reasoning. Since this agent can write code and then execute it, I figured most attempts to add safety features would likely be just security theater.

Did It Work?

Surprisingly, yes! MyCoder.ai quickly started to write its own code, debug it, and even write its own tests. The MVP relied primarily on shell commands, navigating directories and using CLI tools effectively.

(I am pretty sure if I had only exposed the shell exec tool, I still would have had a basic auto-coder working. Everything else just duplicated what was already possible via the shell.)

However, several key issues surfaced:

- Interactive or long-running shell commands blocked the agent.

- Inefficient file I/O. I forced it to read and write whole files, no matter how small the portion it actually cared about.

- Large files overflowed the LLM's context window and blew up the auto-coder.

- No ability to research independently.

Accelerating Development: Tools & Improvements

To address these problems, the toolset evolved:

- Smart Shell: Commands adaptively switched between synchronous and asynchronous modes, solving interactive/long-running process issues.

- TextEditor: Enabled efficient, targeted edits based on character ranges and sub-strings, directly influenced by Anthropic's Claude Desktop.

- Fetch: Enabled autonomous external research by exposing most of Node's fetch API.

- Browser Control (Playwright-based): Enabled automated web browsing to verify web UI correctness and to debug via the DOM and console.

- SubAgents: Facilitated task delegation and complex multi-agent coordination.
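One way to build a "smart shell" like the one above is sketched here. A command runs normally, and if it finishes within a timeout its output is returned directly (synchronous mode). If it is still running, the agent gets back an id instead (asynchronous mode). On later turns it can use that id to read output, send stdin, or kill the process. The function names, the 5-second default, and the id format are assumptions, not MyCoder's actual implementation.

```typescript
import { spawn, type ChildProcess } from "node:child_process";

interface RunningShell {
  proc: ChildProcess;
  stdout: string;
  stderr: string;
  exitCode: number | null;
}

type ShellResult =
  | { mode: "sync"; exitCode: number | null; stdout: string; stderr: string }
  | { mode: "async"; shellId: string };

const running = new Map<string, RunningShell>();
let nextId = 0;

// Start a command; return its output if it exits within timeoutMs,
// otherwise park it in `running` and return an id for later polling.
function smartShellStart(command: string, timeoutMs = 5_000): Promise<ShellResult> {
  const proc = spawn(command, { shell: true, stdio: ["pipe", "pipe", "pipe"] });
  const state: RunningShell = { proc, stdout: "", stderr: "", exitCode: null };
  proc.stdout?.on("data", (chunk: Buffer) => { state.stdout += chunk.toString(); });
  proc.stderr?.on("data", (chunk: Buffer) => { state.stderr += chunk.toString(); });

  return new Promise((resolve) => {
    const timer = setTimeout(() => {
      const shellId = `shell-${nextId++}`;
      running.set(shellId, state);
      resolve({ mode: "async", shellId });
    }, timeoutMs);
    proc.on("close", (code) => {
      state.exitCode = code; // still recorded if we already switched to async mode
      clearTimeout(timer);
      resolve({ mode: "sync", exitCode: code, stdout: state.stdout, stderr: state.stderr });
    });
  });
}

// Later turns: optionally send input or kill, then report what has been captured so far.
function smartShellCheck(shellId: string, opts: { stdin?: string; kill?: boolean } = {}) {
  const state = running.get(shellId);
  if (!state) throw new Error(`unknown shell ${shellId}`);
  if (opts.stdin !== undefined) state.proc.stdin?.write(opts.stdin);
  if (opts.kill) state.proc.kill();
  return { exitCode: state.exitCode, stdout: state.stdout, stderr: state.stderr };
}
```

A quick command like `npm test` on a small project comes back in one step. A dev server or an interactive prompt no longer blocks the agent.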
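The sub-string style of editing mentioned for TextEditor can be sketched in a few lines. To edit a file, the agent supplies an exact snippet and its replacement. The edit is refused if the snippet is missing or appears more than once, which keeps the LLM from changing the wrong spot. A character-range view lets it read only part of a file. The function names `strReplace` and `viewChars` are hypothetical and are not claimed to match MyCoder's or Anthropic's tool interfaces.

```typescript
import { readFileSync, writeFileSync } from "node:fs";

// Replace exactly one occurrence of oldStr; refuse missing or ambiguous matches.
function strReplace(path: string, oldStr: string, newStr: string): string {
  const text = readFileSync(path, "utf8");
  const first = text.indexOf(oldStr);
  if (first === -1) throw new Error(`old string not found in ${path}`);
  if (text.indexOf(oldStr, first + 1) !== -1) {
    throw new Error(`old string is not unique in ${path}; include more surrounding context`);
  }
  writeFileSync(path, text.slice(0, first) + newStr + text.slice(first + oldStr.length));
  return `edited ${path}`;
}

// Return only the characters in [start, end), so large files need not enter the context whole.
function viewChars(path: string, start: number, end: number): string {
  return readFileSync(path, "utf8").slice(start, end);
}
```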
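A fetch tool can be a thin wrapper over Node's global `fetch` (Node 18+). The one addition worth making is a cap on the returned body, so a large page cannot flood the context window the way large files did. The 20,000-character limit and the injectable `fetchImpl` parameter (used here for testing) are assumptions for illustration.

```typescript
interface FetchToolResult {
  status: number;
  contentType: string | null;
  body: string;
  truncated: boolean;
}

// Wraps the global fetch and truncates the response body to maxChars.
async function fetchTool(
  url: string,
  init: Parameters<typeof fetch>[1] = {},
  maxChars = 20_000,
  fetchImpl: typeof fetch = fetch,
): Promise<FetchToolResult> {
  const res = await fetchImpl(url, init);
  const text = await res.text();
  return {
    status: res.status,
    contentType: res.headers.get("content-type"),
    body: text.slice(0, maxChars),
    truncated: text.length > maxChars,
  };
}
```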

Best Practices for Agentic Coding

Through iterative improvements, I identified several best practices:

- Comprehensive README.md: A README.md that clearly details the project's structure, purpose, and guidance significantly boosted agent efficiency. It is essentially a cache of distilled knowledge in a conventional, well-known format.

- Robust Testing and Validation: Your projects should have linting, unit tests, and integration tests to triangulate whether the agentic coder's output is correct. Hallucinations are of course still possible, but these triangulation techniques greatly reduce how many slip through.

- Pre-commit Hooks: Running these validations in pre-commit hooks forces the agent's code to pass them before it can be committed.
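A pre-commit hook only needs to run the project's checks in order and exit non-zero on the first failure. Git then rejects the commit, whether a human or the agent made it. Below is a small TypeScript runner such a hook could call, for example from a hook managed by Husky. The check list and the `npm run lint` / `npm test` script names are hypothetical; substitute your project's own.

```typescript
import { spawnSync } from "node:child_process";

// Hypothetical check definition: a command plus its arguments.
interface Check {
  name: string;
  command: string;
  args: string[];
}

// Run checks sequentially; return the first failing exit code (or 1 if the
// process could not be started), and 0 if everything passed.
function runChecks(checks: Check[]): number {
  for (const check of checks) {
    const result = spawnSync(check.command, check.args, { stdio: "inherit" });
    if (result.status !== 0) {
      console.error(`pre-commit: "${check.name}" failed`);
      return result.status ?? 1;
    }
  }
  return 0;
}

const defaultChecks: Check[] = [
  { name: "lint", command: "npm", args: ["run", "lint"] },
  { name: "unit tests", command: "npm", args: ["test"] },
];

// In the hook script itself you would finish with:
// process.exitCode = runChecks(defaultChecks);
```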

Pushing Efficiency Further

MyCoder.ai matured rapidly:

- Publishing to npm (npm install -g mycoder) made it easy to install.

- GitHub integration automated issue creation, branching, commits, and PR management.

- GitHub Actions integration enabled full autonomy by responding to triggers in PR and issue comments.

- Anthropic's prompt caching reduced operational costs by over 80%.

The integration of GitHub Actions resulted in impressive productivity -- over 200 autonomous commits in a single day.
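Prompt caching suits agent loops well. Each turn resends the same long system prompt plus a growing conversation, and with Anthropic's Messages API you mark cache breakpoints by adding `cache_control: { type: "ephemeral" }` to content blocks. Cache reads are billed at a fraction of the normal input-token price. The sketch below only builds the request body and makes no network call. The helper name, the `max_tokens` value, and the choice to mark the system prompt and the latest message are assumptions. Pass a real model id in place of a placeholder.

```typescript
// Minimal types for the parts of an Anthropic Messages API request used here.
interface TextBlock {
  type: "text";
  text: string;
  cache_control?: { type: "ephemeral" };
}
interface Message {
  role: "user" | "assistant";
  content: TextBlock[];
}

// Mark the unchanging system prompt and the newest message as cache breakpoints,
// so the next turn can reuse the already-processed prefix. Inputs are not mutated.
function buildCachedRequest(systemPrompt: string, history: Message[], model: string) {
  const messages = history.map((m) => ({ ...m, content: m.content.map((b) => ({ ...b })) }));
  const last = messages[messages.length - 1];
  if (last && last.content.length > 0) {
    last.content[last.content.length - 1].cache_control = { type: "ephemeral" };
  }
  const system: TextBlock[] = [{ type: "text", text: systemPrompt, cache_control: { type: "ephemeral" } }];
  return { model, max_tokens: 4096, system, messages };
}
```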

Leaning into Agentic Coding

Agentic coders like MyCoder.ai excel at framework migrations (e.g., Jest to Vitest) and at rapidly adapting to new development stacks. Library and framework choices carry less technical debt as the cost of migration plummets.

You want to lean into what the LLM was trained on. It is much easier to stay in its lane (where things just work) than to force it to do things it wasn't trained on. For example, agentic workflows like AutoGPT didn't really work until Claude 3.5 and o1 arrived, which had been trained on agentic workflows.

Looking ahead, agentic coding promises self-maintaining systems that require minimal human oversight, dramatically reshaping software development and reducing traditional programming costs to near zero.

What's Next?

MyCoder.ai continues to evolve with ongoing enhancements like deeper metacognition, code indexing, and tighter integration with development tools. It's a fascinating journey, transforming how we conceptualize coding itself.

Feel free to reach out with feedback or questions, or join our Discord community at MyCoder.ai.